The artificial intelligence revolution is transforming how we work, learn, and interact with technology. But as AI systems become more powerful and widespread, a critical question emerges: what’s the real environmental cost of our digital conversations with machines like ChatGPT?

OpenAI CEO Sam Altman recently made headlines with surprising claims about his company’s resource consumption. According to Altman’s blog post “The Gentle Singularity,” the average ChatGPT query uses about one-fifteenth of a teaspoon of water – roughly 0.000085 gallons (about 0.3 milliliters), or just a few drops. He also revealed that the average query consumes about 0.34 watt-hours of electricity, comparable to what an oven uses in just over a second.
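As a rough sanity check on those unit comparisons, the conversions can be worked through in a few lines of Python. The per-query figures are the ones quoted above; the 1,000-watt oven draw is an assumption chosen for illustration (real ovens vary widely), not a number from Altman’s post.

```python
# Unit conversions for the per-query figures quoted in the article.
ML_PER_US_GALLON = 3785.411784             # US liquid gallon in milliliters
ML_PER_US_TEASPOON = ML_PER_US_GALLON / 768  # 768 US teaspoons per US gallon
JOULES_PER_WATT_HOUR = 3600.0

WATER_GALLONS_PER_QUERY = 0.000085  # figure quoted from the blog post
ENERGY_WH_PER_QUERY = 0.34          # figure quoted from the blog post
OVEN_WATTS = 1000.0                 # ASSUMPTION: illustrative oven power draw


def water_per_query_ml() -> float:
    """Per-query water use converted from gallons to milliliters."""
    return WATER_GALLONS_PER_QUERY * ML_PER_US_GALLON


def teaspoon_fraction() -> float:
    """Per-query water use as a fraction of one US teaspoon."""
    return water_per_query_ml() / ML_PER_US_TEASPOON


def oven_seconds() -> float:
    """Seconds an oven drawing OVEN_WATTS needs to use one query's energy."""
    return ENERGY_WH_PER_QUERY * JOULES_PER_WATT_HOUR / OVEN_WATTS


if __name__ == "__main__":
    print(f"water per query: {water_per_query_ml():.3f} mL")
    print(f"fraction of a teaspoon: 1/{1 / teaspoon_fraction():.1f}")
    print(f"oven-equivalent time: {oven_seconds():.2f} s")
```

Under these assumptions the quoted figures are internally consistent: about a third of a milliliter, close to one-fifteenth of a teaspoon, and a little over one second of oven time.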

These figures paint a remarkably green picture of AI operations. But the story isn’t quite that simple.

The Optimistic Case: AI Getting More Efficient

Altman’s transparency represents a significant step forward in understanding AI’s environmental footprint. For years, tech companies have been secretive about their data center operations, leaving researchers and policymakers to make educated guesses about energy and water consumption.

The numbers Altman shared are genuinely impressive. If accurate, they suggest that OpenAI has made substantial progress in optimizing its systems. The company has also taken steps to make AI more accessible and affordable, cutting the price of its o3 model by 80 percent.

Altman’s vision extends beyond current efficiency gains. He believes the cost of intelligence will eventually drop to “near the cost of electricity,” as datacenter operations become increasingly automated. This techno-optimistic outlook envisions a future where superintelligence leads to abundant discoveries in the 2030s, making both intelligence and energy “wildly abundant.”

From this perspective, we’re witnessing the early stages of an AI efficiency revolution. Just as computers became exponentially more powerful while consuming less energy per calculation, AI systems might follow a similar trajectory.

The Reality Check: Scale Changes Everything

However, several factors complicate this rosy picture. First, OpenAI hasn’t explained how these water usage figures were calculated, and the methodology matters enormously when assessing environmental impact.

Previous research tells a different story. A 2024 Washington Post investigation found that generating a 100-word email using GPT-4 could use “a little more than one bottle” of water. This stark contrast with Altman’s “few drops” claim highlights how calculations can vary dramatically based on methodology and scope.
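To get a feel for the size of that gap, here is a minimal sketch comparing the two estimates. The 500 mL bottle size is an assumption for illustration (the text above only says “a little more than one bottle”), and the two figures measure different things: a 100-word email versus an average query. Treat the ratio as an order-of-magnitude indication only.

```python
# Rough ratio between the "one bottle" estimate and the "few drops" estimate.
ML_PER_US_GALLON = 3785.411784
ALTMAN_GALLONS_PER_QUERY = 0.000085  # figure quoted from the blog post
BOTTLE_ML = 500.0                    # ASSUMPTION: illustrative bottle size


def bottle_to_drops_ratio(bottle_ml: float = BOTTLE_ML,
                          gallons_per_query: float = ALTMAN_GALLONS_PER_QUERY) -> float:
    """How many times larger the bottle estimate is than the per-query figure."""
    per_query_ml = gallons_per_query * ML_PER_US_GALLON
    return bottle_ml / per_query_ml


if __name__ == "__main__":
    print(f"bottle estimate is about {bottle_to_drops_ratio():,.0f}x the per-query figure")
```

With these inputs the two estimates differ by a factor of more than a thousand, which is why the scope of each calculation (what is counted, and where) matters so much.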

The scale issue is perhaps most concerning. While per-query consumption might be minimal, ChatGPT processes over a billion queries daily. Even tiny amounts, when multiplied a billion times over every day, become substantial. It’s like saying a single grain of sand is insignificant while ignoring that billions of grains create entire beaches.
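The multiplication is simple enough to sketch directly. The snippet below uses the per-query figures Altman cited and treats one billion queries per day as a lower bound (the text says “over a billion”); the function name and defaults are illustrative, not anything published by OpenAI.

```python
# Scaling the per-query figures up to a day of traffic.
QUERIES_PER_DAY = 1_000_000_000  # lower bound: the article says "over a billion"


def daily_totals(queries_per_day: int,
                 gallons_per_query: float = 0.000085,
                 wh_per_query: float = 0.34) -> tuple[float, float]:
    """Return (gallons of water per day, megawatt-hours of electricity per day)."""
    gallons = queries_per_day * gallons_per_query
    megawatt_hours = queries_per_day * wh_per_query / 1_000_000  # Wh -> MWh
    return gallons, megawatt_hours


if __name__ == "__main__":
    gallons, mwh = daily_totals(QUERIES_PER_DAY)
    print(f"water: about {gallons:,.0f} gallons per day")
    print(f"electricity: about {mwh:,.0f} MWh per day")
```

At a billion queries, the “few drops” figure works out to roughly 85,000 gallons of water and 340 megawatt-hours of electricity per day, taking the per-query numbers at face value.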

Moreover, broader AI energy projections paint a concerning picture. The Lawrence Berkeley National Laboratory estimates that AI-specific data center operations will consume between 165 and 326 terawatt-hours of energy in 2028 – enough to power 22% of all US households. Some researchers warn that AI could surpass Bitcoin mining in power consumption by the end of 2025.

The Cooling Conundrum

AI models like GPT-4 require massive data centers that must be cooled constantly – and that’s where water consumption becomes critical. These facilities often use water-intensive cooling systems, particularly in warmer climates. The location of data centers significantly impacts water usage, with facilities in desert regions potentially consuming far more water per query than those in cooler climates.

This geographic variability makes it challenging to provide universal consumption figures. A query processed in a Seattle data center might indeed use just a few drops of water, while the same query processed in Arizona could require significantly more.

The Transparency Challenge

Critics have raised valid concerns about the lack of detailed methodology behind Altman’s figures. AI expert Gary Marcus has been particularly vocal, drawing unfavorable comparisons to past tech industry oversights. The AI community increasingly calls for greater transparency in environmental reporting, including standardized measurement practices and independent verification.

The politeness factor adds an interesting wrinkle to consumption calculations. Altman previously revealed that user courtesy – saying “please” and “thank you” in queries – has cost OpenAI tens of millions of dollars in electricity expenses over time, as these additional words require processing power.

Looking Forward: The Sustainability Question

The central question isn’t whether current AI systems are perfectly efficient, but whether they can scale sustainably. As AI capabilities expand and adoption grows, even modest per-query consumption could aggregate into significant environmental impact.

Several factors will determine AI’s environmental trajectory:

Technological Innovation: Continued improvements in chip efficiency, cooling systems, and model optimization could reduce per-query consumption further.

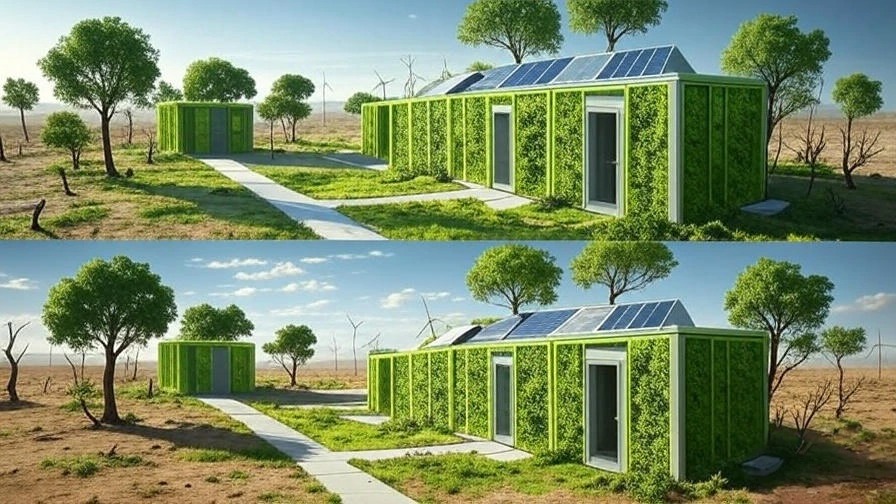

Renewable Energy: The source of electricity matters as much as the amount consumed. AI companies increasingly invest in renewable energy infrastructure.

Regulatory Pressure: Governments worldwide are developing frameworks for AI environmental reporting, potentially mandating greater transparency.

Market Dynamics: Competition and cost pressures naturally drive efficiency improvements.

The Balanced Perspective

Altman’s disclosures represent progress toward transparency, but they shouldn’t end the conversation about AI’s environmental impact. The truth likely lies between the “few drops” optimism and the “water guzzler” pessimism.

What’s certain is that AI’s environmental footprint will largely depend on how the technology scales. If efficiency improvements keep pace with usage growth, AI could remain relatively sustainable. If usage explodes faster than efficiency gains, we could face significant environmental challenges.
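That race between usage growth and efficiency gains can be expressed as a simple compounding model. The sketch below is illustrative only: the growth and efficiency rates are hypothetical inputs, not forecasts, and the model assumes both rates stay constant from year to year.

```python
# Toy model: total footprint = per-query cost x number of queries.
# Each year usage grows by `usage_growth` and per-query cost shrinks
# by `efficiency_gain`; all rates here are HYPOTHETICAL.

def projected_footprint(base_total: float,
                        usage_growth: float,
                        efficiency_gain: float,
                        years: int) -> float:
    """Total footprint after compounding both rates for `years` years."""
    yearly_factor = (1 + usage_growth) * (1 - efficiency_gain)
    return base_total * yearly_factor ** years


if __name__ == "__main__":
    # Usage doubles yearly while per-query cost halves: footprint stays flat.
    print(projected_footprint(100.0, usage_growth=1.0, efficiency_gain=0.5, years=5))
    # Usage grows 50% yearly but efficiency improves only 20%: footprint grows.
    print(projected_footprint(100.0, usage_growth=0.5, efficiency_gain=0.2, years=3))
```

The total footprint shrinks only when the yearly factor `(1 + usage_growth) * (1 - efficiency_gain)` is below 1, which is the condition the paragraph above describes in words.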

The key is maintaining vigilance and demanding continued transparency from AI companies. As AI becomes integral to our digital lives, understanding its true environmental cost becomes essential for making informed decisions about our technological future.

Rather than accepting any single narrative, we should continue asking tough questions, demanding better data, and pushing for innovations that make AI both powerful and sustainable.
